Seismic Facies Analysis

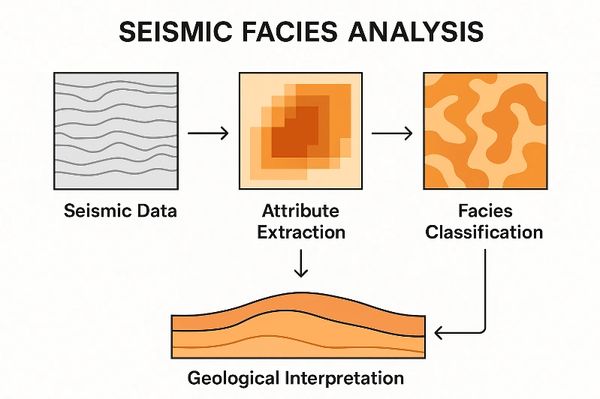

Seismic facies analysis is a key step in seismic interpretation and reservoir characterization. It involves dividing a seismic volume (or section) into areas or intervals that show similar reflection characteristics, which are then interpreted in terms of depositional environment, lithology, and fluid content.

Seismic facies analysis is the interpretation of groups of seismic reflections, based on their geometry, amplitude, continuity, frequency, and configuration, to infer their geological meaning, such as sedimentary environment or rock type.

Key Seismic Attributes Used

Seismic facies are typically recognized by patterns in:

- Amplitude – strength of reflection (indicates acoustic impedance contrasts).

- Continuity – degree of lateral persistence of reflectors (related to depositional uniformity).

- Frequency / bandwidth – sharpness or smoothness of reflections (related to layer thickness and lithologic variability).

- Configuration / geometry – reflection terminations (onlap, downlap, truncation) and shapes of reflectors (parallel, chaotic, hummocky, etc.).

- Phase – can indicate polarity or tuning effects.

Types of Seismic Facies Analysis

1. Qualitative (visual) analysis

- Done by the interpreter visually examining seismic sections or time slices.

- Based on classical criteria defined by Mitchum et al. (1977) in seismic stratigraphy.

2. Quantitative (attribute-based) analysis

- Uses seismic attributes (e.g., RMS amplitude, instantaneous frequency, similarity) or machine learning techniques (e.g., PCA, SOM, K-means) to classify seismic facies automatically.

- Provides facies maps that can be correlated with well data.

Workflow

Typical workflow for seismic facies analysis:

- Define objective (e.g., reservoir delineation, channel detection).

- Select seismic interval (horizon or volume).

- Compute relevant seismic attributes.

- Apply classification or clustering techniques (manual or automatic).

- Interpret facies in geological terms (e.g., channel sand, shale, reef, etc.).

- Validate with well logs, core data, or known geology.

Geological Significance

Seismic facies interpretation helps geoscientists to:

- Identify depositional environments (fluvial, deltaic, turbiditic, reefal, etc.).

- Map reservoir distribution and quality.

- Detect stratigraphic traps or unconformities.

- Support 3D reservoir modeling.

Seismic Quantitative Facies Analysis

Seismic Quantitative Facies Analysis is the data-driven or numerical extension of traditional (qualitative) seismic facies interpretation.

Instead of relying only on visual inspection of reflection patterns, it uses measurable seismic attributes and statistical or machine learning methods to classify and predict facies objectively.

Quantitative seismic facies analysis involves the use of multi-attribute data, pattern recognition, and classification algorithms to automatically or semi-automatically define facies classes that reflect lithological or depositional variations.

It turns qualitative observations (like “high amplitude = sand”) into numerically defined relationships between seismic responses and rock properties.

Main Inputs

1. Seismic attributes — e.g.

- Amplitude-related: RMS amplitude, average energy.

- Frequency-related: instantaneous frequency, bandwidth

- Continuity-related: semblance, coherence

- Morphologic: curvature, dip

2. Well data — facies logs, lithology, porosity, etc.

3. Machine learning methods — for clustering or classification.
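To make the attribute inputs concrete, the sketch below computes several of the amplitude- and frequency-related attributes listed above for a single trace. It is a minimal illustration with three assumptions: the trace is a 1-D NumPy array sampled every `dt` seconds, the whole trace is the analysis window, and the function names are hypothetical. The analytic signal is built with the standard FFT-based Hilbert transform. Continuity and morphologic attributes (semblance, coherence, curvature, dip) need neighboring traces and are not shown.

```python
import numpy as np

def analytic_signal(trace):
    """Analytic signal x + i*H[x] of a real trace, via the FFT-based Hilbert transform."""
    n = trace.size
    h = np.zeros(n)
    h[0] = 1.0                      # keep the zero-frequency term
    if n % 2 == 0:
        h[n // 2] = 1.0             # keep the Nyquist term for even lengths
        h[1:n // 2] = 2.0           # double positive frequencies
    else:
        h[1:(n + 1) // 2] = 2.0
    # Negative frequencies are zeroed, which yields the complex analytic signal
    return np.fft.ifft(np.fft.fft(trace) * h)

def trace_attributes(trace, dt):
    """A few single-trace attributes (hypothetical helper) for a trace sampled every dt seconds."""
    z = analytic_signal(trace)
    envelope = np.abs(z)                                       # instantaneous amplitude (reflection strength)
    phase = np.angle(z)                                        # instantaneous phase, wrapped to (-pi, pi]
    inst_freq = np.gradient(np.unwrap(phase), dt) / (2 * np.pi)  # instantaneous frequency in Hz
    return {
        "rms_amplitude": np.sqrt(np.mean(trace ** 2)),         # RMS over the whole window
        "average_energy": np.mean(trace ** 2),                 # one common definition: mean squared amplitude
        "envelope": envelope,
        "inst_phase": phase,
        "inst_freq": inst_freq,
    }
```

As a quick check, a 30 Hz cosine of amplitude 2 spanning a whole number of periods should give an envelope close to 2 and an instantaneous frequency close to 30 Hz at every sample.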

Workflow

- Select seismic interval or horizon.

- Extract multiple attributes from seismic data.

- Normalize and reduce dimensionality (e.g., PCA).

- Apply classification (supervised or unsupervised).

- Validate with well facies logs.

- Generate 3D facies volume or maps.

- Interpret in geological terms (e.g., channel sands, shales, carbonates).

Advantages

- Objective, repeatable, and data-driven.

- Captures subtle seismic variations not visible to the eye.

- Integrates seismic and well information.

- Essential for reservoir modeling and facies prediction.

Two Major Approaches

1. Unsupervised facies classification

- Requires no prior facies labels from wells.

- Groups data based on attribute similarity.

- Techniques:

- Principal Component Analysis (PCA) for dimensionality reduction.

- K-means clustering

- Self-Organizing Maps (SOM, Kohonen networks)

🟠 Goal: Detect hidden patterns and classify seismic volumes into facies automatically.

2. Supervised facies classification

- Uses training data from wells (known facies labels).

- Builds a predictive model between seismic attributes and known facies.

- Techniques:

- Bayesian classification

- Support Vector Machines (SVM)

- Artificial Neural Networks (ANN)

- Random Forests

🟢 Goal: Predict facies away from wells, providing 3D facies maps.

Example

Suppose you compute RMS amplitude, instantaneous frequency, and coherence from a 3D seismic volume.

Using SOM clustering, the data are divided into facies clusters.

Then, by comparing clusters with well facies logs, you can interpret:

- Facies 1 → clean sandstone

- Facies 2 → shaly sand

- Facies 3 → marine shale
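The comparison between clusters and well facies can be made explicit with a contingency table that counts how often each cluster coincides with each well facies. The helper below is hypothetical. It assumes the cluster labels have been extracted along the well path and co-located sample by sample with an upscaled facies log. Labeling each cluster with its most frequent well facies is one simple choice, not the only one.

```python
import numpy as np

def map_clusters_to_facies(cluster_ids, well_facies, n_clusters, facies_names):
    """Label each cluster with the well facies it co-occurs with most often.

    cluster_ids : cluster label (0..n_clusters-1) of each seismic sample at the well
    well_facies : facies index (0..len(facies_names)-1) from the log at the same samples
    """
    counts = np.zeros((n_clusters, len(facies_names)), dtype=int)
    np.add.at(counts, (cluster_ids, well_facies), 1)      # contingency table: clusters x facies
    mapping = {}
    for c in range(n_clusters):
        if counts[c].sum() == 0:
            mapping[c] = None                              # cluster never sampled at the well
        else:
            mapping[c] = facies_names[int(np.argmax(counts[c]))]
    return counts, mapping

# Toy example with hypothetical labels
names = ["clean sandstone", "shaly sand", "marine shale"]
clusters = np.array([0, 0, 0, 1, 1, 2, 2, 2, 1, 0])
facies = np.array([0, 0, 1, 1, 1, 2, 2, 2, 1, 0])
table, mapping = map_clusters_to_facies(clusters, facies, 3, names)
```

Off-diagonal counts in `table` indicate how cleanly each cluster corresponds to a single facies.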

Principal Component Analysis (PCA)

PCA is a statistical technique that reduces a large set of correlated variables (attributes) into a smaller set of uncorrelated variables called principal components (PCs). Each principal component is a linear combination of the original attributes. The first few components usually explain most of the variability in the data.

In seismic studies, we may have dozens to hundreds of attributes (amplitude, frequency, coherence, curvature, etc.). Many are redundant (e.g., RMS amplitude, reflection strength, and envelope all measure signal energy). PCA helps by:

- Removing redundancy.

- Reducing dimensionality.

- Highlighting the most significant variations in the data.

- Creating composite attributes that better separate geological features.

How PCA Works (Step by Step)

1. Standardize the data

- Normalize each attribute (e.g., to zero mean and unit variance) so that all attributes have comparable scales.

- Example: RMS amplitude values might be in the hundreds, while coherence ranges between 0 and 1.

2. Compute the covariance matrix

- Measures how attributes vary with each other.

3. Calculate eigenvalues and eigenvectors

- Eigenvectors = directions of maximum variance (the new attribute axes).

- Eigenvalues = amount of variance explained by each axis.

4. Rank the components

- PC1 (first principal component) explains the maximum variance.

- PC2 explains the next largest variance (orthogonal to PC1).

- PC3, PC4, etc., explain progressively less.

5. Project data into new space

- The seismic attributes are transformed into a smaller set of PCs.
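The five steps above map directly onto a few lines of NumPy. This is a minimal sketch. It assumes the attributes are stored as an `(n_samples, n_attributes)` array with no constant columns. The function name `pca` and its return values are illustrative; production work would typically use a library implementation such as scikit-learn's `PCA`.

```python
import numpy as np

def pca(attributes, n_components):
    """PCA of an (n_samples, n_attributes) array, following steps 1-5."""
    # 1. Standardize: zero mean and unit variance for every attribute
    X = (attributes - attributes.mean(axis=0)) / attributes.std(axis=0)
    # 2. Covariance matrix between attributes (columns are variables)
    cov = np.cov(X, rowvar=False)
    # 3. Eigenvalues/eigenvectors; eigh is appropriate because cov is symmetric
    eigvals, eigvecs = np.linalg.eigh(cov)
    # 4. Rank components by decreasing eigenvalue (explained variance)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    explained_ratio = eigvals / eigvals.sum()
    # 5. Project the standardized data onto the leading eigenvectors
    loadings = eigvecs[:, :n_components]        # weight of each attribute in each PC
    scores = X @ loadings                        # PC values for every sample
    return scores, loadings, explained_ratio
```

The columns of `loadings` show which attributes dominate each component, which is how statements such as "PC1 = mostly amplitude-based attributes" are read off in practice.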

Practical Example in Seismic

- Suppose you extract 10 seismic attributes around a channel system.

- PCA shows:

- PC1 = mostly amplitude-based attributes (RMS, reflection strength, envelope).

- PC2 = mostly geometric attributes (coherence, curvature).

- PC3 = mostly spectral attributes (dominant frequency, spectral energy).

- Instead of interpreting 10 maps, you now interpret 3 principal components that capture the essential information.

Advantages of PCA

- Reduces data size while preserving most information.

- Removes redundancy between correlated attributes.

- Helps identify hidden patterns in attribute space.

- Often used as a preprocessing step before clustering or machine learning.

Limitations

- PCA is linear → may miss non-linear relationships.

- PCs are mathematical combinations of attributes, not always directly interpretable geologically.

- Requires careful scaling and validation with well data.

PCA ANALYSIS on real data

PCA based on 8 seismic attributes: instantaneous amplitude, average energy, reflection strength, instantaneous phase, instantaneous frequency, dominant frequency, spectral energy, and semblance. The three principal components, with their correlations, are shown in three panels.

K-means Clustering Analysis

K-means clustering facies analysis is an unsupervised machine learning method used in geophysics and geology to automatically classify seismic or well-log data into distinct seismic facies or lithofacies based on their similarity in selected attributes. The K-means algorithm partitions a dataset into K groups (clusters) such that each data point belongs to the cluster with the nearest mean (centroid). It minimizes the within-cluster variance, i.e., the sum of squared distances between each point and its cluster centroid, which makes each cluster as compact as possible.

🪨 Application to Facies Analysis

In facies analysis, the goal is to group parts of the subsurface (seismic traces or well log intervals) that share similar properties, such as:

- Seismic attributes (amplitude, frequency, phase, semblance, coherency, etc.)

- Well log features (gamma ray, density, neutron, sonic, resistivity, etc.)

- Derived features (inversion results, AVO attributes, etc.)

These groups are interpreted as facies, which can correspond to lithological, depositional, or structural differences.

⚙️ Typical Workflow

1. Select Input Attributes:

Choose meaningful features that represent subsurface variability (e.g. RMS amplitude, instantaneous frequency, or GR + RHOB logs).

2. Normalize Data:

Scale all features to have similar ranges (important because K-means is distance-based).

3. Choose Number of Clusters (K):

K can be guided by domain knowledge or by methods such as the elbow method or the silhouette score.

4. Apply K-means Algorithm:

- Initialize K centroids (e.g., at K randomly chosen data points).

- Assign each data point to the nearest centroid.

- Update centroids as the mean of points in each cluster.

- Iterate until centroids stabilize.

5. Interpret Clusters:

Map each cluster to a facies class — often validated using core or well data.

6. Visualize Results:

Display facies maps, cross-sections, or 3D volumes showing different facies regions.
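Step 4 of the workflow (Lloyd's algorithm) can be sketched in NumPy. The sketch assumes the attributes have already been normalized as in step 2. It makes one deliberate swap from the text: instead of purely random centroids, it seeds with k-means++, which spreads the initial centroids apart. It also keeps the best of several runs. Both are common ways to reduce the sensitivity to initial centroid placement listed under Limitations. Function names and defaults are hypothetical.

```python
import numpy as np

def _kmeans_pp_init(X, k, rng):
    """k-means++ seeding: each new centroid is drawn with probability proportional to D^2."""
    centroids = [X[rng.integers(len(X))]]
    for _ in range(k - 1):
        # Squared distance from every sample to its nearest already-chosen centroid
        d2 = ((X[:, None, :] - np.array(centroids)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centroids.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    return np.array(centroids, dtype=float)

def kmeans(X, k, n_init=5, max_iter=100, seed=0):
    """K-means on an (n_samples, n_features) array of normalized attributes.

    Runs n_init times and keeps the solution with the lowest within-cluster
    sum of squares (inertia). Returns (labels, centroids, inertia)."""
    rng = np.random.default_rng(seed)
    best = None
    for _run in range(n_init):
        centroids = _kmeans_pp_init(X, k, rng)
        for _it in range(max_iter):
            # Assignment step: nearest centroid by squared Euclidean distance
            d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
            labels = d2.argmin(axis=1)
            # Update step: centroid = mean of its members (keep old one if a cluster empties)
            new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                            for j in range(k)])
            converged = np.allclose(new, centroids)
            centroids = new
            if converged:               # centroids have stabilized
                break
        # Final assignment and inertia for this run
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        inertia = float(d2[np.arange(len(X)), labels].sum())
        if best is None or inertia < best[2]:
            best = (labels, centroids, inertia)
    return best
```

The returned `inertia` is the quantity plotted against K in the elbow method. Running `kmeans(X, k)` for several values of K and looking for the bend in that curve is one way to carry out step 3.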

Practical Example

- Seismic Facies Classification: cluster seismic attributes (e.g., amplitude, phase, frequency) to reveal depositional patterns.

- Log-based Lithofacies: cluster logs (e.g., GR, NPHI, RHOB) to identify lithologies (e.g., sand, shale, limestone).

Advantages of K-means Clustering

- No prior labels needed (unsupervised).

- Simple and fast to compute.

- Works well with multi-attribute data.

Limitations

- You must specify K manually.

- Sensitive to initial centroid placement.

- Assumes clusters are roughly spherical and equally sized.

- Results depend on attribute scaling.

K-MEANS CLUSTERING ANALYSIS on real data

K-means clustering analysis based on 8 seismic attributes: instantaneous amplitude, average energy, reflection strength, instantaneous phase, instantaneous frequency, dominant frequency, spectral energy, and semblance. Three classes are recognized by K-means clustering, corresponding to different lithological types.

Self-Organizing Maps Analysis (SOM)

A Self-Organizing Map (SOM) is a type of artificial neural network, developed by Teuvo Kohonen, that projects high-dimensional data onto a 2D grid while preserving topological relationships (i.e., similar points stay close together on the map). By reducing complex multi-attribute data to a 2D “map” on which similar data points are grouped together, SOM helps reveal hidden patterns and facies clusters.

In facies analysis, the SOM is used to automatically cluster well-log or seismic attribute data into facies classes (lithological or seismic). The key strength is that SOM learns nonlinear relationships and high-dimensional feature patterns, something K-means can’t easily capture.

⚙️ Typical Workflow

1. Input Data Selection

Choose relevant features:

- Well-log data: GR, NPHI, RHOB, DT, Rt, etc.

- Seismic data: amplitude, frequency, phase, coherency, curvature, etc.

2. Data Normalization

Scale features (usually to 0–1 or to z-scores).

This matters because SOM compares samples by distance and is therefore sensitive to differences in attribute magnitude.

3. Define the SOM Grid

A 2D lattice (e.g. 10×10 neurons) — each node (neuron) will represent one “prototype” pattern of the data.

4. Training

Each data vector is compared to all neurons.

- The Best Matching Unit (BMU), i.e., the neuron most similar to the data vector, and its neighbors are updated to become more like the input.

- Over many iterations, the map “self-organizes” — similar data points end up close together on the 2D grid.

5. Clustering / Facies Identification

After training, neurons are grouped (manually or via another algorithm like K-means) into facies classes.

These clusters are then mapped spatially or along wells.

6. Interpretation

Interpret each cluster as a distinct facies, based on geological knowledge or core calibration.
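The training loop in steps 3 and 4 can be sketched as an online Kohonen SOM in NumPy. This is a minimal sketch under stated assumptions:

- a rectangular grid and a Gaussian neighborhood;
- a learning rate and neighborhood radius that both decay linearly;
- prototypes initialized from randomly chosen samples.

These choices, the default values, and the function names are illustrative, not settings from the source. The resulting prototype vectors are what step 5 would group into facies classes, for example with K-means.

```python
import numpy as np

def train_som(X, rows=10, cols=10, n_iter=5000, lr0=0.5, sigma0=None, seed=0):
    """Train a rectangular SOM on an (n_samples, n_features) normalized array.

    Returns prototype (weight) vectors with shape (rows, cols, n_features)."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    sigma0 = sigma0 or max(rows, cols) / 2.0
    # Initialize every neuron's prototype with a randomly chosen data sample
    weights = X[rng.integers(len(X), size=rows * cols)].reshape(rows, cols, n_features).astype(float)
    # (row, col) position of every neuron on the grid, used by the neighborhood function
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)                  # learning rate decays linearly to 0
        sigma = sigma0 * (1.0 - frac) + 0.5      # neighborhood radius shrinks to a floor of 0.5
        x = X[rng.integers(len(X))]              # one random training vector
        # Best Matching Unit: neuron whose prototype is closest to x
        dist = ((weights - x) ** 2).sum(axis=2)
        bmu = np.unravel_index(dist.argmin(), dist.shape)
        # Gaussian neighborhood measured on the grid, not in attribute space
        grid_d2 = ((grid - np.array(bmu)) ** 2).sum(axis=2)
        h = np.exp(-grid_d2 / (2 * sigma ** 2))
        # Move the BMU and its grid neighbors toward x
        weights += lr * h[:, :, None] * (x - weights)
    return weights

def map_to_bmu(X, weights):
    """Flat (row-major) index of the BMU for every sample."""
    flat = weights.reshape(-1, weights.shape[2])
    d2 = ((X[:, None, :] - flat[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)
```

Samples that share a BMU, or whose BMUs are neighbors on the grid, have similar attribute vectors. Mapping `map_to_bmu` back to sample positions is what produces a facies map once the neurons have been grouped.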

Practical Example

- Seismic Facies Classification: apply SOM to multiple seismic attributes → visualize a 2D map → identify depositional features like channels, reefs, or fan systems.

- Well Log Facies Analysis: combine GR, RHOB, NPHI, and DT logs → SOM clusters → lithofacies or reservoir quality zones.

Advantages of SOM

- ✅ Captures nonlinear relationships

- ✅ Preserves topological proximity (helps visualize geological trends)

- ✅ Handles multi-attribute seismic data naturally

- ✅ Produces color-coded 2D maps, which are very intuitive for interpreters

Typical Output Visualization

- SOM component planes: show how each input attribute varies across the map.

- U-matrix: visualizes distance between neurons (revealing cluster boundaries).

- Facies map: each SOM cluster shown in different color across seismic or well sections.

SELF-ORGANIZING MAPS ANALYSIS on real data

Self-Organizing Maps analysis based on 8 seismic attributes: instantaneous amplitude, average energy, reflection strength, instantaneous phase, instantaneous frequency, dominant frequency, spectral energy, and semblance. Three classes are recognized by the Self-Organizing Map, corresponding to different lithological types.

Supervised Facies Classification

In supervised facies classification, we train a model on known facies labels from wells (the supervision). We then use seismic attributes (the inputs) to predict facies across the seismic volume. In other words, the facies types are already known at the well locations (from core or log interpretation), and the goal is to propagate them away from the wells using seismic attributes.

⚙️ Common supervised methods

| Method | Description | Strengths |
|---|---|---|
| Linear Discriminant Analysis (LDA) | Assumes linear boundaries between facies. | Simple and interpretable. |
| Quadratic Discriminant Analysis (QDA) | Allows curved (quadratic) boundaries. | Good for overlapping facies. |
| k-Nearest Neighbors (KNN) | Classifies based on nearby training points. | Non-parametric. |
| Support Vector Machine (SVM) | Finds optimal separating boundaries. | Robust, widely used. |
| Random Forest (RF) | Ensemble of decision trees. | Handles non-linearity; good accuracy. |
| Neural Networks (NN) | Deep nonlinear mapping. | Powerful, but needs more data. |

🧠 1️⃣ SVM — Support Vector Machine

Type: Boundary-based classifier (geometrical / hyperplane)

🔹 Concept:

SVM finds an optimal separating boundary (hyperplane) between facies classes in attribute space (e.g., seismic attributes or PCA components). It maximizes the margin, i.e., the distance between the boundary and the closest training points of each class (called support vectors).

🔹 In facies classification:

- SVM works very well when the classes are well-separated in attribute space.

- You can use non-linear kernels (RBF, polynomial) to model curved decision boundaries.

🔹 Advantages:

- Excellent performance in high-dimensional attribute spaces (e.g., many attributes or PCA components).

- Handles non-linear separations using kernels.

- Usually robust to small training datasets (few wells).

🔹 Limitations:

- Computationally expensive for large seismic volumes.

- Doesn’t provide probability outputs easily.
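A production workflow would typically use a library classifier such as scikit-learn's `SVC`. To keep the example dependency-free, the sketch below swaps in a different training method: a binary, linear soft-margin SVM fitted with the Pegasos stochastic sub-gradient algorithm on the hinge loss. It assumes normalized attributes and labels in {-1, +1}. Kernels, multi-class facies (e.g., one-vs-rest), and probability outputs are not shown, and the function names and defaults are hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, n_epochs=50, seed=0):
    """Binary linear soft-margin SVM trained with Pegasos.

    X : (n_samples, n_features) normalized attributes
    y : labels in {-1, +1} (e.g. -1 = shale, +1 = sand)
    Returns weights w and bias b of the hyperplane w.x + b = 0.
    Simplification: the bias is learned as an extra weight and is regularized too."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    Xb = np.hstack([X, np.ones((n, 1))])    # constant column carries the bias
    w = np.zeros(d + 1)
    t = 0
    for _epoch in range(n_epochs):
        for i in rng.permutation(n):
            t += 1
            eta = 1.0 / (lam * t)            # Pegasos step size
            margin = y[i] * (Xb[i] @ w)      # functional margin before the update
            w *= (1.0 - eta * lam)           # L2 shrinkage: favors a wide margin
            if margin < 1:                   # inside the margin or misclassified: hinge loss active
                w += eta * y[i] * Xb[i]
    return w[:-1], w[-1]

def predict_svm(X, w, b):
    """Side of the hyperplane for every sample."""
    return np.where(X @ w + b >= 0, 1, -1)
```

The regularization weight `lam` plays the role of the inverse of the usual `C` parameter: smaller values penalize margin violations more strongly.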

🌳 2️⃣ Decision Tree (Tree-based Classifier)

Type: Rule-based (hierarchical partitioning)

🔹 Concept:

A decision tree splits the attribute space step by step using threshold conditions on individual attributes. Each node represents a question (a test on one attribute), each branch an outcome of that test, and each leaf a predicted facies.

🔹 In facies classification:

The tree automatically finds thresholds in seismic attributes that best separate facies.

🔹 Advantages:

- Easy to interpret (geologists can trace attribute thresholds).

- Fast training and prediction.

- Works with non-linear and non-normally distributed data.

🔹 Limitations:

- Individual trees may overfit training data.

- Sensitive to noise — often used inside an ensemble for stability.
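The threshold search described above can be sketched as a small CART-style classification tree. At each node it chooses the attribute and threshold that minimize the weighted Gini impurity. This is a minimal sketch assuming integer facies labels starting at 0. The function names, the Gini criterion, and the `max_depth` stopping rule are illustrative choices; libraries such as scikit-learn provide `DecisionTreeClassifier`. Limiting depth is the simplest guard against the overfitting listed under Limitations.

```python
import numpy as np

def gini(labels):
    """Gini impurity of an integer label array."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def best_split(X, y):
    """Try every attribute and midpoint threshold; return the (feature, threshold) with lowest weighted Gini."""
    best = (None, None, gini(y))
    for f in range(X.shape[1]):
        values = np.unique(X[:, f])
        for thr in (values[:-1] + values[1:]) / 2:      # midpoints keep both sides non-empty
            left = X[:, f] <= thr
            score = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
            if score < best[2]:
                best = (f, thr, score)
    return best[0], best[1]

def build_tree(X, y, depth=0, max_depth=3, min_samples=2):
    """Recursively grow a tree; each leaf stores the majority facies of its samples."""
    majority = int(np.bincount(y).argmax())
    if depth == max_depth or len(y) < min_samples or len(np.unique(y)) == 1:
        return {"leaf": majority}
    f, thr = best_split(X, y)
    if f is None:                                       # no split reduces impurity
        return {"leaf": majority}
    left = X[:, f] <= thr
    return {"feature": f, "threshold": float(thr),
            "left": build_tree(X[left], y[left], depth + 1, max_depth, min_samples),
            "right": build_tree(X[~left], y[~left], depth + 1, max_depth, min_samples)}

def predict_tree(node, x):
    """Follow attribute thresholds from the root to a leaf for one sample x."""
    while "leaf" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]
```

The returned dictionary is the interpretable part: each internal node reads as a rule such as "attribute 0 ≤ 0.5".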

👥 3️⃣ K-Nearest Neighbors (KNN)

Type: Instance-based (distance-based local method)

🔹 Concept:

KNN does no explicit training: it stores the training samples and classifies each new sample by a majority vote of its K closest training samples in attribute space.

🔹 In facies classification:

KNN is well suited to capturing local variability in seismic attributes and subtle facies transitions.

🔹 Advantages:

- Simple and intuitive.

- Captures nonlinear and irregular facies boundaries.

- Works well when classes overlap or have complex shapes.

🔹 Limitations:

- Computationally heavy on large data (each new sample must be compared with every training sample).

- Sensitive to attribute scaling → normalization is essential.

- “K” must be tuned (too small → noisy; too large → over-smoothed).
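Because KNN only stores the training data, the whole method fits in a few lines. This is a minimal sketch assuming normalized attributes, Euclidean distance, non-negative integer facies labels, and simple majority voting. The function name and the default `k` are hypothetical.

```python
import numpy as np

def knn_predict(X_train, y_train, X_new, k=5):
    """Classify each row of X_new by majority vote of its k nearest training samples.

    Attributes must already be normalized, because distances are Euclidean."""
    # Squared distances between every new sample and every training sample: (n_new, n_train)
    d2 = ((X_new[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]      # indices of the k closest well samples
    votes = y_train[nearest]                     # their facies labels
    # Majority vote per row; ties go to the smallest facies index
    return np.array([np.bincount(row).argmax() for row in votes])
```

In practice K would be tuned, for example by holding out one well at a time and checking prediction accuracy at that well.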

🧩 4️⃣ Ensemble (Bagging / Random Forest)

Type: Multiple-model voting (ensemble learning)

🔹 Concept:

Instead of a single tree, many decision trees are trained, each on a bootstrap sample (random sampling with replacement) of the training data. Each tree votes for a facies class, and the majority vote gives the final prediction. This is Bagging (Bootstrap Aggregating); when each tree also considers only a random subset of the attributes, the ensemble becomes a Random Forest.

🔹 In facies classification:

Ensembles are robust to noise and variability in seismic attributes, and they can capture complex non-linear facies patterns with much less overfitting than a single tree.

🔹 Advantages:

- High accuracy and stability.

- Can handle mixed-scale attributes easily.

- Provides class probabilities and variable importance (which attributes matter most).

🔹 Limitations:

- Less interpretable than a single tree.

- Slightly slower to train.
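The bagging and feature-subsampling ideas can be sketched without a library. To keep it short, this sketch uses depth-1 trees (decision stumps) instead of the fully grown trees of a real Random Forest. It therefore illustrates the voting mechanism rather than replacing an implementation such as scikit-learn's `RandomForestClassifier`. It assumes integer facies labels starting at 0, and the function names and defaults are hypothetical. The fraction of votes per class plays the role of the class probabilities mentioned under Advantages.

```python
import numpy as np

def gini(y, n_classes):
    """Gini impurity of integer labels y."""
    p = np.bincount(y, minlength=n_classes) / len(y)
    return 1.0 - np.sum(p ** 2)

def fit_stump(X, y, features, n_classes):
    """Best single-threshold split (depth-1 tree) over the given feature subset."""
    best = None
    for f in features:
        values = np.unique(X[:, f])
        for thr in (values[:-1] + values[1:]) / 2:
            left = X[:, f] <= thr
            score = (left.sum() * gini(y[left], n_classes)
                     + (~left).sum() * gini(y[~left], n_classes)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, thr,
                        np.bincount(y[left], minlength=n_classes).argmax(),    # class of left leaf
                        np.bincount(y[~left], minlength=n_classes).argmax())   # class of right leaf
    if best is None:                       # sampled features are constant: predict the majority everywhere
        m = np.bincount(y, minlength=n_classes).argmax()
        return (features[0], np.inf, m, m)
    return best[1:]                        # (feature, threshold, left_class, right_class)

def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = np.random.default_rng(seed)
    n_classes = int(y.max()) + 1
    forest = []
    for _ in range(n_trees):
        rows = rng.integers(len(X), size=len(X))                           # bootstrap sample (bagging)
        feats = rng.choice(X.shape[1], size=max_features, replace=False)   # random attribute subset
        forest.append(fit_stump(X[rows], y[rows], feats, n_classes))
    return forest, n_classes

def predict_forest(forest, n_classes, X):
    """Majority vote of all stumps; vote fractions act as rough class probabilities."""
    votes = np.zeros((len(X), n_classes))
    for f, thr, left_cls, right_cls in forest:
        pred = np.where(X[:, f] <= thr, left_cls, right_cls)
        votes[np.arange(len(X)), pred] += 1
    proba = votes / len(forest)
    return proba.argmax(axis=1), proba
```

Counting how often each attribute is chosen across the stumps gives a crude version of the variable-importance output listed under Advantages.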

KNN-Supervised ANALYSIS on real data

KNN-Supervised analysis based on 8 seismic attributes: instantaneous amplitude, average energy, reflection strength, instantaneous phase, instantaneous frequency, dominant frequency, spectral energy, and semblance.
